The global Autonomous Mobile Robot (AMR) market is expected to reach $4.1 billion by 2028, thanks in large part to rapid advances in robotics and artificial intelligence. The United States represents the fastest-growing market for AMRs, with increased adoption in manufacturing, logistics, retail, health care and e-commerce.

Today’s AMRs come equipped with advanced technology, allowing them to perceive, understand and navigate their surroundings, detect obstacles and make decisions in real time to avoid collisions. It’s this obstacle detection and avoidance that allows robots to safely work alongside humans in a wide variety of sectors.

In this blog post, we’ll take a closer look at obstacle detection and avoidance — and what it means for robot and human interaction in the workplace now and in the future.

What Is Obstacle Detection and Avoidance?

Obstacle detection and avoidance refers to a robot’s ability to understand its surroundings and navigate around obstacles in real time. But it’s not just about preventing collisions, though that’s a big part of it. It’s about enabling robots to make sense of their environment and to make decisions, informed by what they have learned, that let them operate safely and efficiently.

A Brief History of AMR Obstacle Avoidance

In 1949, neurophysiologist William Grey Walter built two rudimentary robots he called Elmer and Elsie, which are now considered the first biologically inspired and behavior-based robots. Walter outfitted the tortoise-shaped robots with light and bump sensors that allowed them to navigate around obstacles.

In the 1960s, the Stanford Research Institute (now SRI International) made technological strides in robotics with the introduction of Shakey, the first mobile robot that could perceive its surroundings and reason about its own actions. Shakey was equipped with whisker-like bump sensors, a TV camera and an infrared triangulating rangefinder to sense its environment, plus an antenna for a two-way radio link to the computer that used this data to map its surroundings and decide on a path.

These and other early innovations were foundational in developing today’s AMRs that can navigate safely through unpredictable environments.

How Do AMRs Detect and Avoid Obstacles?

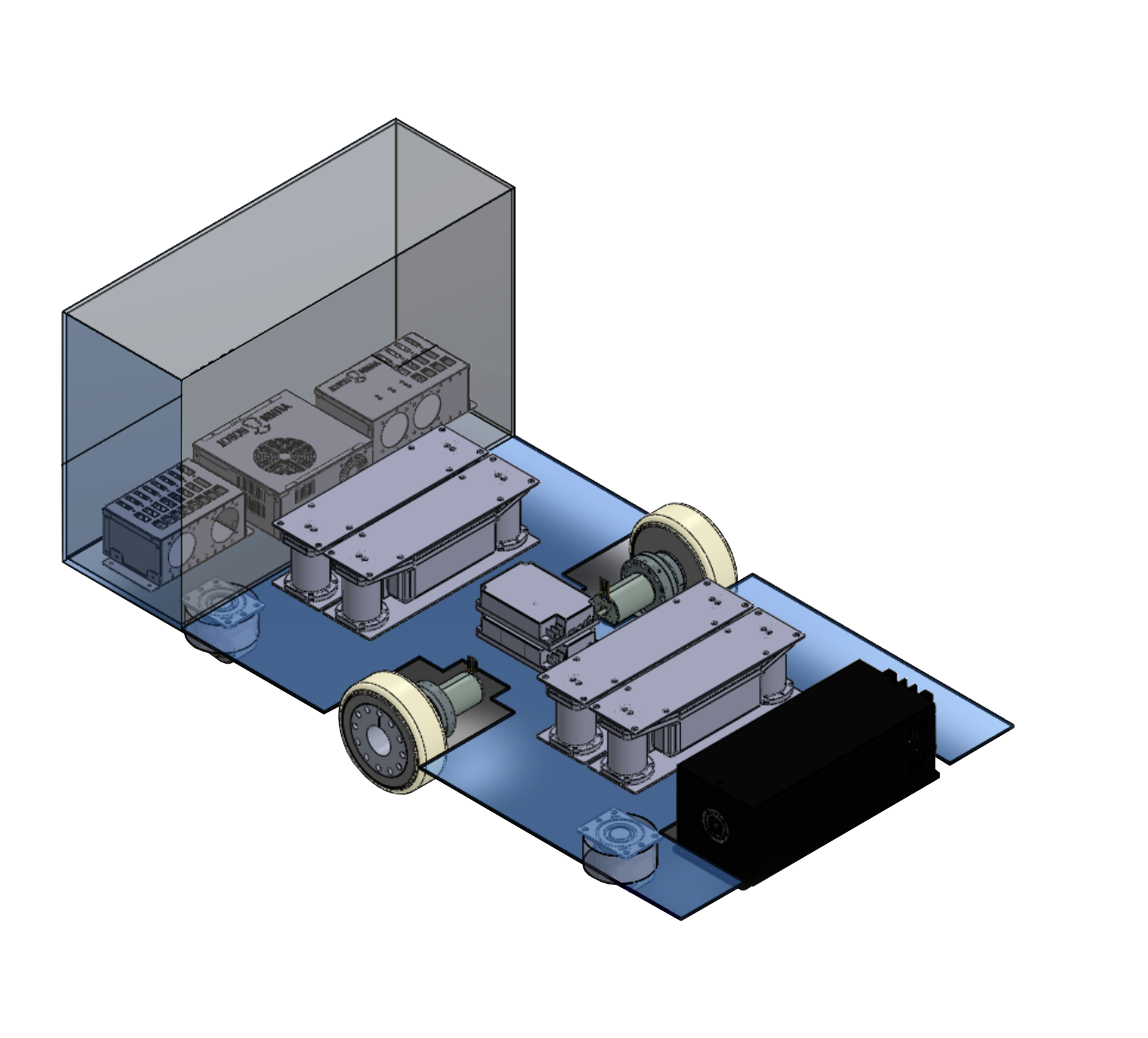

In the decades since bump and light sensors were considered cutting-edge, the technology that enables obstacle detection and avoidance has evolved dramatically. Today’s AMRs are equipped with sophisticated sensors that constantly gather data about the robot’s surroundings and build a real-time understanding of the environment. The most commonly used sensors include:

- LiDAR. LiDAR, or light detection and ranging, is a sensor technology that uses lasers to measure distance. The sensor emits laser pulses and times how long each one takes to bounce back. Because light travels at a known speed, each round-trip time converts to a distance, and many such measurements combine into an accurate representation of the environment.

- 3D LiDAR. Where a 2D LiDAR scans a single horizontal plane, 3D LiDAR breaks out of that plane to collect location data along the X, Y and Z axes.

- Cameras. Cameras allow AMRs to “see” their environment. In Shakey’s case, the mounted TV camera allowed it to reorient itself if it got lost. More sophisticated cameras found in today’s AMRs use advanced imaging to capture, analyze and interpret visual data.

- Radar. These sensors use electromagnetic radio waves to judge the distance to objects, allowing the AMR to detect and avoid them.

- Ultrasonic. Similar to radar, ultrasonic sensors detect objects by timing echoes, but they use high-frequency sound waves instead of radio waves.
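LiDAR and ultrasonic sensors share the same time-of-flight arithmetic: the pulse travels to the object and back, so the distance is half the wave speed multiplied by the round-trip time. The Python sketch below illustrates that, along with converting a single 3D LiDAR return (a range plus two angles) into X, Y and Z coordinates. The function names, the 343 m/s speed of sound (dry air at roughly 20 °C) and the angle convention are illustrative assumptions; real sensors handle timing and calibration in their own firmware.

```python
import math

SPEED_OF_LIGHT_M_S = 299_792_458.0  # LiDAR pulses travel at the speed of light
SPEED_OF_SOUND_M_S = 343.0          # assumed: ultrasound in dry air at about 20 °C


def echo_distance(round_trip_s: float, wave_speed_m_s: float) -> float:
    """Distance to a reflecting object from a round-trip echo time.

    The pulse travels out and back, so the one-way distance is
    half of (wave speed x round-trip time).
    """
    return wave_speed_m_s * round_trip_s / 2.0


def lidar_return_to_xyz(range_m: float, azimuth_rad: float, elevation_rad: float):
    """Convert one 3D LiDAR return to (x, y, z) in the sensor's own frame.

    Assumed convention: azimuth is measured in the horizontal plane from the
    X axis, and elevation is measured upward from that plane.
    """
    horizontal = range_m * math.cos(elevation_rad)  # projection onto the X-Y plane
    return (
        horizontal * math.cos(azimuth_rad),
        horizontal * math.sin(azimuth_rad),
        range_m * math.sin(elevation_rad),
    )


if __name__ == "__main__":
    # A laser echo that returns after 20 nanoseconds
    print(f"LiDAR: {echo_distance(20e-9, SPEED_OF_LIGHT_M_S):.3f} m")
    # An ultrasonic echo that returns after 5.83 milliseconds
    print(f"Ultrasonic: {echo_distance(5.83e-3, SPEED_OF_SOUND_M_S):.3f} m")
    # A 3D LiDAR return 3 m away at 30 degrees azimuth and 10 degrees elevation
    print(lidar_return_to_xyz(3.0, math.radians(30), math.radians(10)))
```

A 20-nanosecond laser echo works out to roughly 3 m, while an ultrasonic echo from about 1 m takes several milliseconds, which is why LiDAR timing electronics need far finer precision than ultrasonic ones.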

Most AMRs do not rely on a single sensor; instead, they integrate data from several sources. This integration, called sensor fusion, lets AMRs make informed decisions about how to navigate a given space and detect and avoid obstacles accurately.
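Sensor fusion can be done in many ways. One of the simplest, shown here as an illustration rather than how any particular AMR works, is an inverse-variance weighted average: each sensor’s distance estimate is weighted by how low its noise is assumed to be. This is the same weighting a one-dimensional Kalman filter applies when it combines independent measurements of a quantity that is not changing. The `RangeReading` type, the `fuse` function and the noise figures below are hypothetical.

```python
from dataclasses import dataclass


@dataclass
class RangeReading:
    sensor: str
    distance_m: float
    variance_m2: float  # assumed noise variance of this sensor's estimate


def fuse(readings: list[RangeReading]) -> tuple[float, float]:
    """Inverse-variance weighted fusion of independent distance estimates.

    Each reading is weighted by 1 / variance, so less noisy sensors count more.
    Returns (fused distance, fused variance); the fused variance is never larger
    than the smallest input variance.
    """
    if not readings:
        raise ValueError("need at least one reading")
    weights = [1.0 / r.variance_m2 for r in readings]
    total_weight = sum(weights)
    fused = sum(w * r.distance_m for w, r in zip(weights, readings)) / total_weight
    return fused, 1.0 / total_weight


if __name__ == "__main__":
    readings = [
        RangeReading("lidar", 2.00, 0.01 ** 2),       # assumed ~1 cm standard deviation
        RangeReading("ultrasonic", 2.10, 0.05 ** 2),  # assumed ~5 cm standard deviation
    ]
    distance, variance = fuse(readings)
    print(f"fused distance: {distance:.3f} m, std dev: {variance ** 0.5:.4f} m")
```

With these assumed noise levels the fused estimate lands at about 2.004 m, close to the less noisy LiDAR reading, and its uncertainty is slightly smaller than the LiDAR’s alone.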

Interpreting Sensor Data for Obstacle Avoidance

Sensing obstacles is one thing, but the real intelligence of AMRs lies in their ability to interpret sensor data and react. This real-time processing is made possible through simultaneous localization and mapping (SLAM) technology.

SLAM is a process by which a robot builds a map of its surroundings while simultaneously keeping track of its own location within that map. This is done by taking data from the robot’s sensors and applying algorithms to process that data into a usable map. One of the key advantages of SLAM is that it doesn’t require any prior knowledge of the environment to create an accurate map.
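SLAM combines two estimation problems: where the robot is, and what the map looks like. The sketch below covers only the mapping half, under the simplifying assumption that the robot’s pose is already known; real SLAM systems estimate the pose at the same time, for example with particle filters or pose-graph optimization. It uses a log-odds occupancy grid, a common map representation: cells a LiDAR beam passes through become more likely to be free, and the cell where the beam ends becomes more likely to be occupied. The class name, grid size and log-odds increments are assumptions chosen for illustration.

```python
import math

# Assumed log-odds increments; real systems tune these to their sensor model.
LOG_ODDS_HIT = 0.85    # evidence that the cell where a beam ended is occupied
LOG_ODDS_MISS = -0.4   # evidence that a cell the beam passed through is free


def bresenham(x0: int, y0: int, x1: int, y1: int) -> list[tuple[int, int]]:
    """Grid cells on the straight line from (x0, y0) to (x1, y1), inclusive."""
    cells = []
    dx, dy = abs(x1 - x0), -abs(y1 - y0)
    sx = 1 if x0 < x1 else -1
    sy = 1 if y0 < y1 else -1
    err = dx + dy
    while True:
        cells.append((x0, y0))
        if x0 == x1 and y0 == y1:
            return cells
        e2 = 2 * err
        if e2 >= dy:
            err += dy
            x0 += sx
        if e2 <= dx:
            err += dx
            y0 += sy


class OccupancyGrid:
    """Log-odds occupancy grid; 0.0 means unknown (probability 0.5)."""

    def __init__(self, width: int, height: int, resolution_m: float):
        self.width, self.height, self.res = width, height, resolution_m
        self.log_odds = [[0.0] * width for _ in range(height)]

    def to_cell(self, x_m: float, y_m: float) -> tuple[int, int]:
        return int(x_m // self.res), int(y_m // self.res)

    def integrate_beam(self, pose, range_m: float, bearing_rad: float) -> None:
        """Fold one range reading into the map.

        pose = (x_m, y_m, heading_rad) is ASSUMED known here; full SLAM
        would estimate it jointly with the map.
        """
        x, y, heading = pose
        hit_x = x + range_m * math.cos(heading + bearing_rad)
        hit_y = y + range_m * math.sin(heading + bearing_rad)
        ray = bresenham(*self.to_cell(x, y), *self.to_cell(hit_x, hit_y))
        for cx, cy in ray[:-1]:
            self._add(cx, cy, LOG_ODDS_MISS)  # beam passed through: more likely free
        self._add(*ray[-1], LOG_ODDS_HIT)     # beam ended here: more likely occupied

    def _add(self, cx: int, cy: int, delta: float) -> None:
        if 0 <= cx < self.width and 0 <= cy < self.height:  # ignore cells off the map
            self.log_odds[cy][cx] += delta

    def probability(self, cx: int, cy: int) -> float:
        """Convert log-odds back to an occupancy probability."""
        return 1.0 / (1.0 + math.exp(-self.log_odds[cy][cx]))


if __name__ == "__main__":
    grid = OccupancyGrid(width=20, height=20, resolution_m=0.1)  # a 2 m x 2 m area
    pose = (0.05, 1.05, 0.0)  # robot near the left edge, facing +X
    for _ in range(3):        # three scans that agree: something about 1 m ahead
        grid.integrate_beam(pose, 1.0, 0.0)
    print("hit cell:", grid.probability(*grid.to_cell(1.05, 1.05)))
    print("cell along the beam:", grid.probability(*grid.to_cell(0.55, 1.05)))
```

Repeated, consistent readings push the hit cell’s probability up and the cells along the beam down, which is how the map firms up as data pours in.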

As the data pours in from LiDAR and other sensors, the AMR makes an up-to-the-moment judgment call on how to react to various changes in the environment in order to complete the required task.
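That judgment call can start from a simple rule: find the nearest obstacle in front of the robot and compare it with the distance the robot needs to stop at its current speed (reaction distance plus braking distance, v·t + v²/(2a)). The Python sketch below is a hypothetical reactive layer, not a certified safety function; the forward cone, reaction time, deceleration and margin are assumed values, and production AMRs typically rely on safety-rated sensors with protective fields defined under the relevant standards.

```python
import math
from enum import Enum


class Action(Enum):
    CONTINUE = "continue"
    SLOW = "slow"
    STOP = "stop"


# Assumed parameters for illustration only, not values from any safety standard.
FORWARD_HALF_ANGLE_RAD = math.radians(30)  # half-width of the cone checked ahead
REACTION_TIME_S = 0.1                      # sensing and processing delay
DECELERATION_M_S2 = 1.0                    # braking capability
MARGIN_M = 0.2                             # extra buffer beyond the computed stop point


def stopping_distance(speed_m_s: float) -> float:
    """Distance covered during the reaction time plus braking distance v^2 / (2a)."""
    return (speed_m_s * REACTION_TIME_S
            + speed_m_s ** 2 / (2 * DECELERATION_M_S2)
            + MARGIN_M)


def decide(scan: list[tuple[float, float]], speed_m_s: float) -> Action:
    """Pick an action from one 2D scan of (bearing_rad, range_m) pairs.

    Bearing 0 points straight ahead; only returns inside the forward cone count.
    """
    ahead = [r for bearing, r in scan if abs(bearing) <= FORWARD_HALF_ANGLE_RAD]
    nearest = min(ahead, default=math.inf)
    needed = stopping_distance(speed_m_s)
    if nearest <= needed:
        return Action.STOP      # cannot safely keep going at this speed
    if nearest <= 2 * needed:
        return Action.SLOW      # slowing down shrinks the stopping distance
    return Action.CONTINUE


if __name__ == "__main__":
    scan = [(math.radians(-45), 0.4), (0.0, 1.2), (math.radians(20), 3.0)]
    print(decide(scan, speed_m_s=1.0))
```

In this example the object 0.4 m away at 45 degrees lies outside the forward cone, so the robot slows for the one 1.2 m straight ahead instead of stopping. A real planner would then also choose a new heading, which is where the map from SLAM comes in.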

Together, SLAM and sensor technologies like LiDAR allow AMRs to navigate their surroundings safely and efficiently while detecting and avoiding obstacles.

The Importance of Obstacle Detection and Avoidance in AMRs

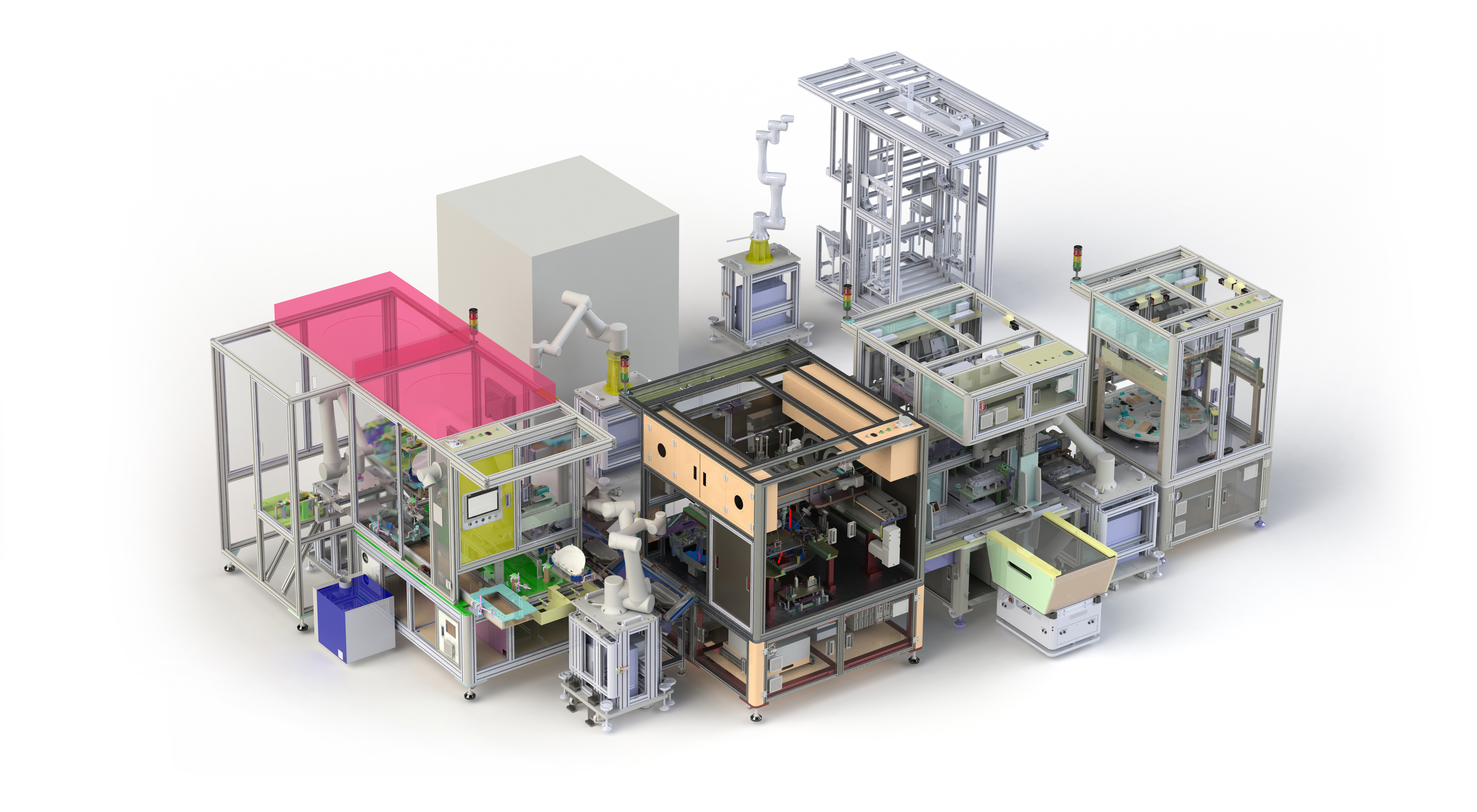

As the number of AMRs in workplaces increases, obstacle detection becomes even more important. Sophisticated detection and avoidance systems not only allow robots to work seamlessly alongside their human counterparts but also deliver broader benefits in safety and efficiency.

Safety. In the early days of industrial robots, the machines were housed in cages to protect human workers from their powerful and dangerous moving parts. Today, robots work among us. To make this possible, bodies like the International Organization for Standardization (ISO) have developed safety standards that help ensure AMRs can navigate busy workplaces without endangering human coworkers.

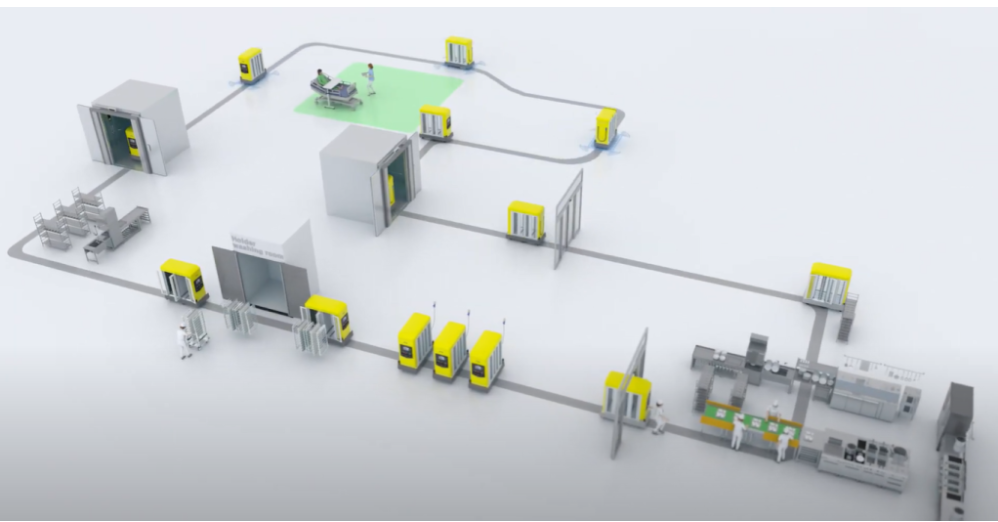

AMRs can navigate crowded corridors, ride elevators and provide essential services. They can also reduce workplace injuries by taking on tasks that pose risks to humans, like lifting heavy loads or entering contaminated spaces.

Efficiency. Beyond making spaces safer, AMRs can enhance efficiency and productivity, reduce downtime and operate around the clock as needed to meet company demand.

Obstacle Avoidance vs. Collision Avoidance: What’s the Difference?

Both automated guided vehicles (AGVs) and AMRs can detect obstacles in their paths.

AGVs operate using basic guidance mechanisms like magnetic tracks, allowing them to move along pre-mapped paths within a facility. If there is something in the way, an AGV will halt or reroute based on programmed instructions. Often, a human will need to move the obstacle in order for the robot to continue its work. This is best described as collision avoidance.

AMRs also have collision avoidance, enabling them to stop safely before running into an unexpected object. The primary difference between collision avoidance and obstacle avoidance is what happens afterward.

AGVs can avoid a collision but can’t interpret or adapt to their surroundings dynamically. AMRs, equipped with sophisticated sensors, can encounter an obstacle, prevent a collision, reroute and then continue the task the same way a human worker would.
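The difference can be illustrated on a small grid. In this sketch, the AGV follows a fixed route and halts in front of the first blocked cell, while the AMR plans its own route, marks an obstacle on its map when it detects one directly ahead, and replans from where it stands. Breadth-first search stands in for the planners real AMRs use (such as A* on a costmap); the grid, the function names and the one-cell “sensor check” are simplifying assumptions for illustration.

```python
from collections import deque

Cell = tuple[int, int]


def bfs_path(grid, start: Cell, goal: Cell):
    """Shortest 4-connected path from start to goal, avoiding '#' cells, or None."""
    rows, cols = len(grid), len(grid[0])
    came_from = {start: None}
    queue = deque([start])
    while queue:
        cell = queue.popleft()
        if cell == goal:
            path = []
            while cell is not None:  # walk back from goal to start
                path.append(cell)
                cell = came_from[cell]
            return path[::-1]
        r, c = cell
        for nxt in ((r + 1, c), (r - 1, c), (r, c + 1), (r, c - 1)):
            nr, nc = nxt
            if (0 <= nr < rows and 0 <= nc < cols
                    and grid[nr][nc] != "#" and nxt not in came_from):
                came_from[nxt] = cell
                queue.append(nxt)
    return None


def agv_drive(world, route: list[Cell]):
    """AGV-style: follow a fixed route and halt in front of the first blocked cell."""
    for here, nxt in zip(route, route[1:]):
        if world[nxt[0]][nxt[1]] == "#":
            return here, "halted: obstacle must be cleared"
    return route[-1], "arrived"


def amr_drive(world, start: Cell, goal: Cell):
    """AMR-style: plan on its own map; when the cell ahead turns out to be
    blocked, add it to the map and replan from the current position."""
    known = [["."] * len(row) for row in world]  # map starts with no obstacles
    pos, trace = start, [start]
    path = bfs_path(known, pos, goal)
    while pos != goal:
        if path is None:
            return trace, "no route: waiting"
        nxt = path[1]                          # path[0] is the current cell
        if world[nxt[0]][nxt[1]] == "#":       # "sensor" detects an obstacle ahead
            known[nxt[0]][nxt[1]] = "#"        # record it on the map
            path = bfs_path(known, pos, goal)  # reroute around it
            continue
        pos, path = nxt, path[1:]
        trace.append(pos)
    return trace, "arrived"


if __name__ == "__main__":
    world = [".....",
             "..#..",   # a box left in the middle of the aisle
             "....."]
    print("AGV:", agv_drive(world, [(1, c) for c in range(5)]))
    trace, status = amr_drive(world, (1, 0), (1, 4))
    print("AMR:", status, "in", len(trace) - 1, "moves:", trace)
```

With a box in the middle of the aisle, the AGV stops after one step and waits for someone to clear it, while the AMR detours around the box and reaches the goal in six moves instead of the four a clear aisle would need.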

The Future of AMRs at Work

As obstacle detection and avoidance continue to improve, thanks to better sensor technology and advancements in AI and machine learning, AMRs will make their way into more industries and applications than ever. This will not only increase productivity and safety but also open the door for even more sophisticated human-robot collaboration, propelling innovation and creativity to new heights.